I tested 25 AI agent frameworks this year, and these are the 10 that were fast, reliable, and ready for real-world use in 2026.

What is an AI agent framework?

An AI agent framework is a development environment that provides tools, libraries, and predefined components to simplify the building, deployment, and management of autonomous AI agents.

Instead of making you build everything from scratch, these frameworks supply building blocks such as memory, state management, tool access, and API integrations, so agents can interact with users, fetch data, or execute tasks independently.

In simple terms, it allows an AI assistant to understand context, use external tools, and work across multiple steps or conversations.
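To make those building blocks concrete, here is a minimal, framework-free sketch in Python. Everything in it is hypothetical (the `Agent` class, the `fake_model` stand-in, and the `lookup_order` tool); it only illustrates how memory, tool access, and a multi-step loop fit together. A real framework would put an actual LLM call where `fake_model` sits.

```python
from dataclasses import dataclass, field
from typing import Callable


def lookup_order(order_id: str) -> str:
    """Hypothetical tool: a real one would query an order system."""
    return f"Order {order_id} has shipped."


def fake_model(memory: list[str]) -> dict:
    """Stand-in for an LLM: request a tool first, then answer from its result."""
    last = memory[-1]
    if last.startswith("tool:"):
        return {"action": "answer", "text": last.removeprefix("tool:")}
    return {"action": "tool", "name": "lookup_order", "argument": "A123"}


@dataclass
class Agent:
    tools: dict[str, Callable[[str], str]]
    memory: list[str] = field(default_factory=list)  # persists across steps

    def run(self, user_message: str, max_steps: int = 5) -> str:
        self.memory.append(f"user:{user_message}")
        for _ in range(max_steps):
            decision = fake_model(self.memory)
            if decision["action"] == "answer":
                self.memory.append(f"agent:{decision['text']}")
                return decision["text"]
            # The model asked for a tool: call it and remember the result.
            result = self.tools[decision["name"]](decision["argument"])
            self.memory.append(f"tool:{result}")
        return "Stopped: step limit reached."
```

Calling `Agent(tools={"lookup_order": lookup_order}).run("Where is my order?")` walks through two steps: one tool call, then one answer built from the stored result.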

My top 10 AI agent frameworks in 2026: TL;DR

- Lindy: Best no-code agent framework for business users

- LangChain: Best for custom LLM workflows

- CrewAI: Best for multi-agent orchestration

- OpenAI Responses API: Best for GPT-native applications

- AutoGen: Best for conversation-driven agents

- LlamaIndex: Best offering of prepackaged document agents

- LangGraph: Best for graph-based agents

- Haystack: Best for RAG and multimodal AI

- FastAgency: Best for high-speed inference and production scaling

- Rasa: Best for chatbots and voice assistants

Top 10 AI agent frameworks in 2026

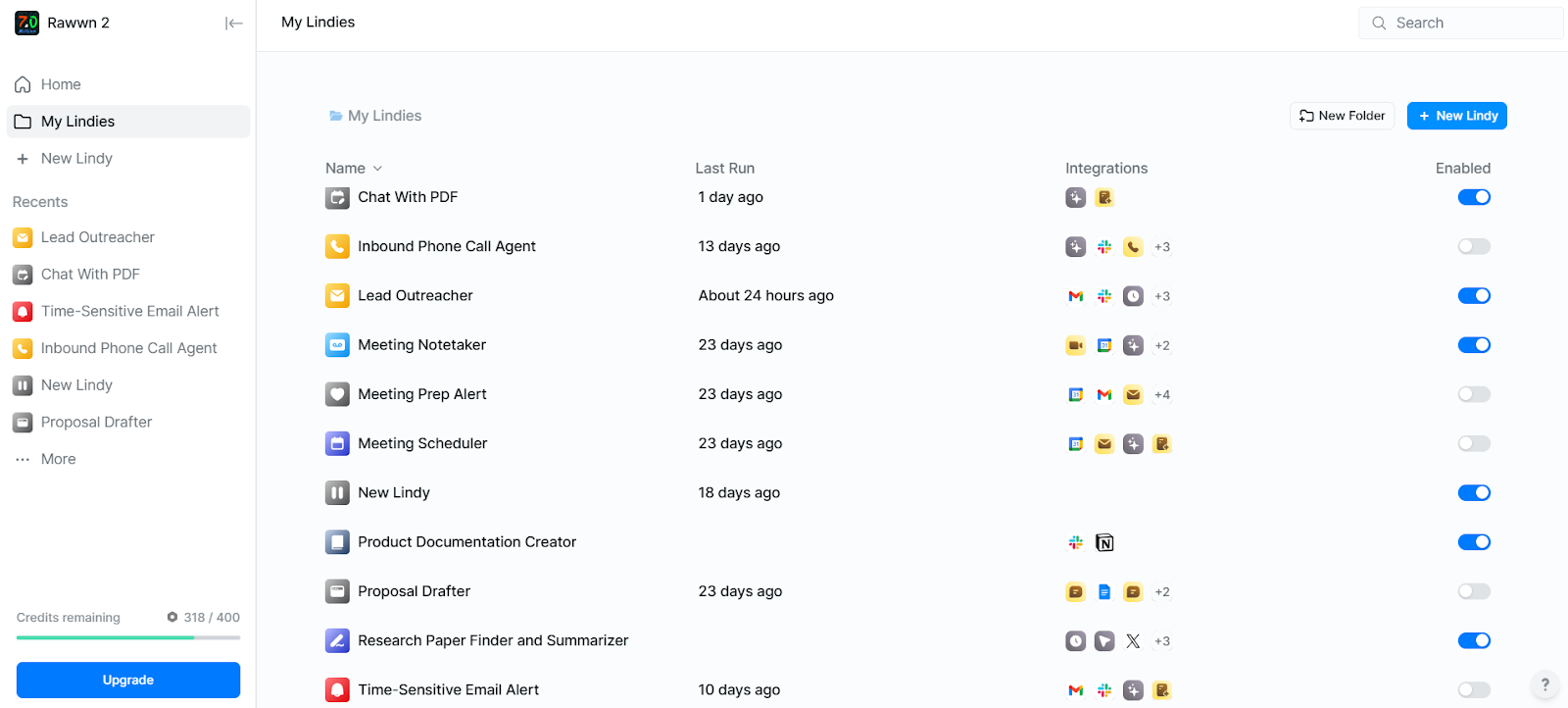

1. Lindy: Best no-code AI agent framework for business users

What does it do? Lindy helps teams build, deploy, and manage AI agents that automate everyday business tasks without coding.

Who is it for? Teams that want to eliminate repetitive work and create intelligent workflows without relying on developers.

Lindy automates business tasks through AI agents built without code. Describe what needs to happen (answer support emails, update CRM records, summarize meetings), and Lindy generates the workflow. The system maintains business context across tasks, so agents understand how actions connect.

The visual builder connects popular business tools through drag-and-drop interfaces. Workflows support conditional logic, loops, and persistent memory. Agents retain information across sessions, referencing past interactions when making decisions.

Multiple agents can work on the same process. One agent qualifies inbound leads and passes qualified contacts to a second agent that drafts outreach, while a third logs activity in your CRM. This delegation happens automatically based on rules you set.

Lindy includes human-in-the-loop controls. Agents pause for approval before taking specified actions, giving you oversight on sensitive tasks like payment processing or contract modifications. You can even deploy agents as Slack bots, website widgets, or voice assistants. The same underlying workflow adapts to different interfaces without separate configurations.
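The approval step described above is a general pattern, and it can be sketched in a few lines of Python. This is not Lindy's implementation: the action names, the `SENSITIVE_ACTIONS` set, and the `approve` callback are all assumptions made up for illustration.

```python
from typing import Callable

# Hypothetical set of action names that need a person's sign-off.
SENSITIVE_ACTIONS = {"process_payment", "modify_contract"}


def run_action(name: str, payload: dict,
               approve: Callable[[str, dict], bool]) -> str:
    """Run an action, pausing for approval first if it is marked sensitive."""
    if name in SENSITIVE_ACTIONS and not approve(name, payload):
        return f"{name}: blocked, waiting for human approval"
    # Non-sensitive or approved actions go straight through.
    return f"{name}: executed"
```

In practice `approve` would send a Slack message or email and wait for a reply; here it is just a function that returns `True` or `False`.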

Lindy supports current models such as GPT-5, Claude Sonnet 4.5, and Gemini 2.5. Switch between them based on task requirements (speed vs. reasoning depth), or let Lindy route requests to the most appropriate model automatically.

Lindy Academy provides documentation, tutorials, and workflow templates for building and deploying agents. The resource library covers setup fundamentals, integration guides, and use case examples across sales, support, and operations.

Pricing

Lindy starts with a free plan offering 40 monthly tasks. Paid plans begin at $49.99/month (Pro) and scale up to $199.99/month (Business).


2. LangChain: Best for custom LLM workflows

What does it do? LangChain helps developers build, manage, and deploy AI agents that can reason, fetch data, and take action across different tools.

Who is it for? Engineers and technical teams who want deep control, full visibility, and freedom to use any AI model they like.

LangChain breaks down agent development into modular pieces. Instead of wrestling with prompt handling, memory management, and tool integration separately, the framework brings them together in one place.

The LangSmith integration shows you what's happening under the hood. Every agent decision gets logged, every prompt variation can be tested side by side, and execution traces reveal exactly where things work or break.

For agents that need to think through problems step by step, LangGraph adds workflow control.

Build logic that branches based on conditions, loops through options, or chains together complex reasoning. This becomes essential when agents handle tasks with multiple decision points.

Deploy on LangChain Cloud for managed hosting, or run everything on your own servers when data privacy matters. The framework stays model-agnostic, so swapping between GPT, Claude, or other models doesn't require rebuilding your agent logic.
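Model-agnostic design usually comes down to coding against an interface rather than a vendor client. The sketch below shows the idea in plain Python, without using LangChain itself; `ChatModel`, `EchoModelA`, and `EchoModelB` are hypothetical names, and the echo classes stand in for real provider clients.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Anything with a complete() method can serve as the model."""
    def complete(self, prompt: str) -> str: ...


class EchoModelA:
    """Hypothetical stand-in for one provider's client."""
    def complete(self, prompt: str) -> str:
        return f"[model-a] {prompt}"


class EchoModelB:
    """Hypothetical stand-in for a second provider's client."""
    def complete(self, prompt: str) -> str:
        return f"[model-b] {prompt}"


def summarize(model: ChatModel, text: str) -> str:
    """Agent logic depends only on the interface, not on a vendor."""
    return model.complete(f"Summarize: {text}")
```

Swapping `EchoModelA()` for `EchoModelB()` changes the output prefix but leaves `summarize` untouched, which is the property the framework is aiming for.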

The modular setup means spending time upfront figuring out how pieces connect. New teams will need to work through how components interact and how to structure workflows properly. The framework trades initial simplicity for long-term flexibility.

Engineering teams building production systems where observability and customization matter more than quick setup will find the investment worthwhile.

Pricing

LangChain has a free plan. Paid plans start at $39/seat/month.

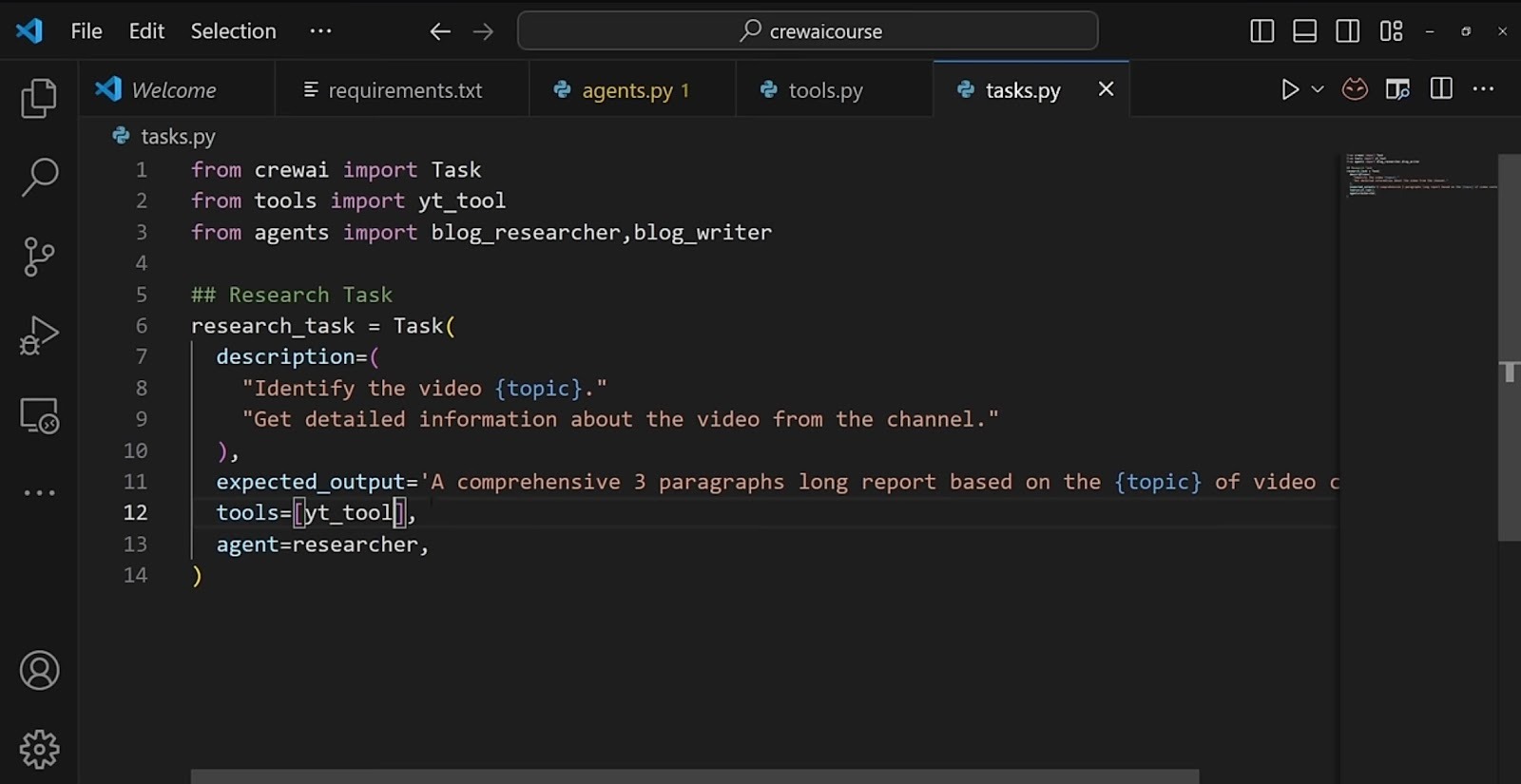

3. CrewAI: Best for multi-agent orchestration

What does it do? CrewAI helps you build and manage teams of AI agents that can plan, reason, and collaborate to complete complex workflows.

Who is it for? Organizations that want enterprise-level control over how their AI agents work together, from setup to scaling.

CrewAI orchestrates teams of AI agents that collaborate on complicated workflows. Each agent operates with its own role, memory, and reasoning capability, coordinating through a central management system.

The Agent Management Platform (AMP) handles the full lifecycle: build, test, deploy, and monitor from a single dashboard.

You can design workflows where one agent researches data, another drafts content, and a third validates output, all passing context between steps automatically.
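As a rough illustration of that research, draft, validate hand-off, here is a plain-Python sketch. It does not use CrewAI's API; `RoleAgent`, `run_crew`, and the three worker functions are hypothetical, and each worker is a stub where a real agent would call a model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RoleAgent:
    role: str
    work: Callable[[dict], dict]  # reads shared context, returns updates


def researcher(context: dict) -> dict:
    return {"facts": [f"fact about {context['topic']}"]}


def writer(context: dict) -> dict:
    return {"draft": "Draft based on: " + "; ".join(context["facts"])}


def validator(context: dict) -> dict:
    # Toy check: the draft must mention the requested topic.
    return {"approved": context["topic"] in context["draft"]}


def run_crew(agents: list[RoleAgent], context: dict) -> dict:
    """Run agents in order, merging each agent's output into the context."""
    for agent in agents:
        context = {**context, **agent.work(context)}
    return context


crew = [
    RoleAgent("researcher", researcher),
    RoleAgent("writer", writer),
    RoleAgent("validator", validator),
]
result = run_crew(crew, {"topic": "pricing"})
```

Each agent only sees and extends a shared dictionary, so adding a fourth role means appending one more `RoleAgent` to the list.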

Setup works across cloud environments, private VPCs, or distributed teams.

Once you are familiar with it, CrewAI handles state management and inter-agent communication for you, so you can focus on defining roles and logic rather than infrastructure.

CrewAI's open-source foundation gives you access to the core framework, but production-ready features require extensive integration. The initial configuration demands technical familiarity, making it less suitable for teams wanting plug-and-play automation.

The framework rewards investment. As workflows become more sophisticated, CrewAI's orchestration layer scales without requiring architectural changes.

Pricing

CrewAI offers a free plan. The paid plans start at $99/month.

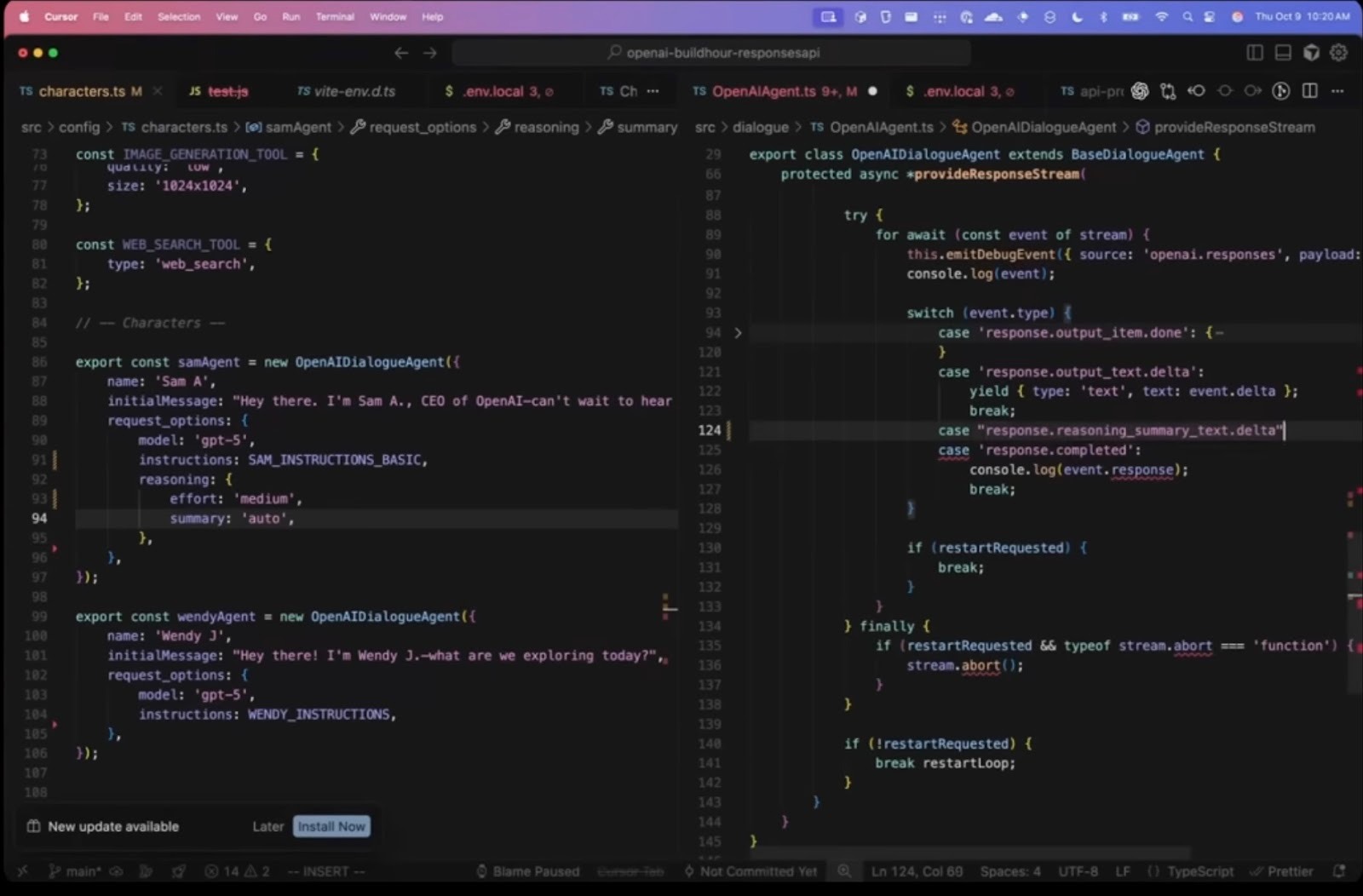

4. OpenAI Responses API: Best for building GPT-native applications

What does it do? The Responses API lets you create dynamic AI assistants powered by models like GPT-5, complete with tools, structured outputs, and real-time interaction.

Who is it for? Developers and product teams who want to build GPT-based apps quickly without managing multiple endpoints or complex orchestration layers.

The new Responses API is OpenAI’s answer to years of developer feedback. Instead of handling separate APIs for chat, tools, and file handling, you now have one unified endpoint that can call functions, generate responses, and stream results in real time.

You define the assistant’s “personality” through simple instructions. Choose a model like GPT-5, and connect tools such as code interpreter, file search, or your own APIs. It lets you upload documents, process images, or send structured data in JSON mode in one call.

I tested the Responses API by wiring it to a local app that generated travel itineraries using GPT-5 and the file search tool. It pulled flight data, summarized options, and produced a complete plan in seconds.

Developers also get a new Run Lifecycle, which means you can handle events, partial updates, and streaming results easily.

And because the API now handles both synchronous and streaming workflows, it fits just as well in a prototype as it does in a large-scale production app.

OpenAI set out to smooth the rough edges of the old system. The bulky session handling and repeated history processing are gone.

With the new Responses API, you control exactly what context gets sent, making it faster and easier to manage. Because pricing is token-based, long or multimodal calls can add up quickly if you are not careful with context size.
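To see how context size drives cost, a quick back-of-the-envelope calculation helps. The sketch below is generic Python, not part of the OpenAI SDK. The per-million-token prices passed in are placeholders rather than real rates, and counting one token per word is a crude assumption.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Cost of one call given token counts and per-million-token prices."""
    total = (input_tokens * input_price_per_million
             + output_tokens * output_price_per_million)
    return total / 1_000_000


def trim_history(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages that fit a rough token budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = len(message.split())  # crude proxy: one token per word
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order
```

With placeholder prices of 1.00 and 4.00 per million tokens, a call with 10,000 input tokens and 2,000 output tokens comes to 0.018. Trimming old messages before each call is one simple way to keep the input side of that sum under control.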

Pricing

Responses API is not priced separately. Tokens are billed at the chosen language model’s input and output rates.

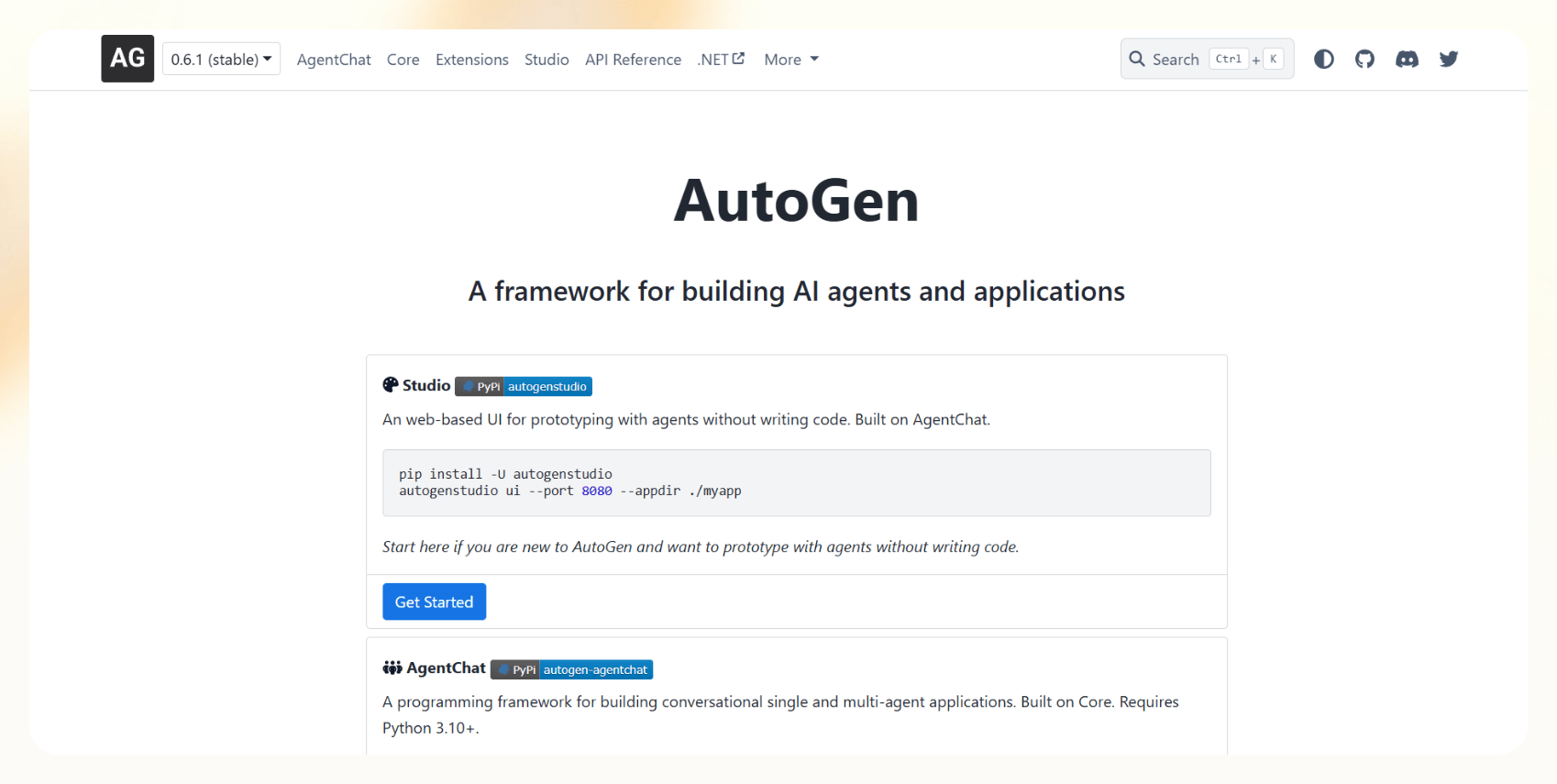

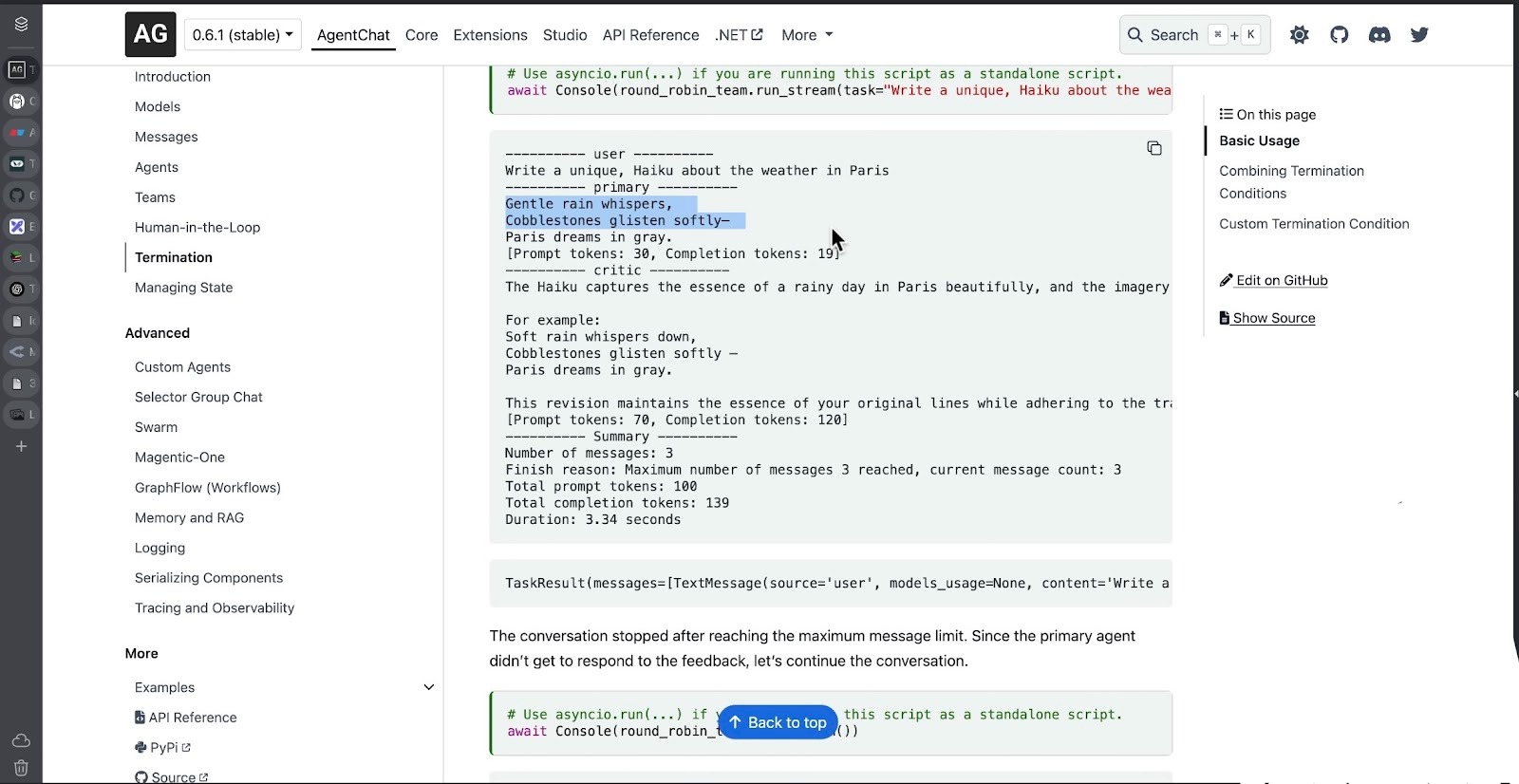

5. AutoGen: Best for conversation-driven agents

What does it do? AutoGen lets developers build and connect multiple AI agents that can talk to each other, share context, and solve complex problems together.

Who is it for? Developers and researchers who want an open, flexible way to design multi-agent systems without being tied to any single model or vendor.

AutoGen v0.4 brings meaningful performance improvements to multi-agent systems. Agents now execute tasks in parallel rather than sequentially, enabling true concurrent workflows where multiple agents reason and respond at the same time.
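The difference between sequential and concurrent execution is easy to show with Python's standard `asyncio` module. This is a generic sketch, not AutoGen's API: `agent_task` is a hypothetical agent whose `sleep` stands in for a model or tool call.

```python
import asyncio


async def agent_task(name: str, delay: float) -> str:
    """Hypothetical agent: the sleep stands in for a model or tool call."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def run_concurrently() -> list[str]:
    # gather() starts all three coroutines together and returns their
    # results in the same order as the arguments.
    return await asyncio.gather(
        agent_task("researcher", 0.02),
        agent_task("critic", 0.01),
        agent_task("summarizer", 0.03),
    )


results = asyncio.run(run_concurrently())
```

Because the three tasks wait at the same time, the total runtime is roughly that of the slowest task rather than the sum of all three.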

The framework supports custom models, tools, and memory systems.

You can swap the underlying messaging layer to match your infrastructure without rewriting agent logic. OpenTelemetry integration provides full traceability; every agent action, decision, and handoff appears in your monitoring stack.

AutoGen Studio adds a visual interface for designing agent workflows. Map out conversation patterns, tool usage, and delegation logic before writing code. This reduces iteration cycles when prototyping complex agent interactions.

The framework handles both Python and .NET implementations, though cross-language setups require additional configuration.

Running AutoGen in distributed setups requires manual work to keep the state and messages in sync. For example, if agents share data across machines, you need to handle the orchestration yourself.

AutoGen rewards technical depth. Teams comfortable with systems architecture can build sophisticated agent networks that adapt to complex business logic and scale across infrastructure boundaries.

Pricing

AutoGen is open-source and free to use under the MIT license.

6. LlamaIndex: Best offering of prepackaged document agents

What does it do? LlamaIndex helps developers build AI applications faster by handling tasks like document understanding, retrieval, and workflow orchestration.

Who is it for? Developers and enterprises that rely on large amounts of unstructured data and want to turn it into something their AI agents can actually use.

LlamaIndex handles document parsing, indexing, and retrieval for AI agents working with unstructured data. The combination of LlamaCloud and the core framework covers file processing, organization, and reasoning without requiring custom preprocessing scripts.

Setup moves quickly from file upload to working queries. Drop in text files, PDFs, or scanned documents, and LlamaIndex handles parsing, chunking, and retrieval automatically.
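For readers new to the terms, chunking and retrieval can be sketched in a few lines of plain Python. This is not LlamaIndex code, and the word-overlap scoring is a toy assumption; production systems typically use embeddings and a vector store instead.

```python
def chunk_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into word-based chunks that share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def retrieve(chunks: list[str], query: str, top_k: int = 1) -> list[str]:
    """Rank chunks by how many query words they contain (toy scoring)."""
    query_words = set(query.lower().split())

    def score(chunk: str) -> int:
        return len(query_words & set(chunk.lower().split()))

    return sorted(chunks, key=score, reverse=True)[:top_k]


text = "invoices are due in thirty days refunds take five business days"
chunks = chunk_text(text, chunk_size=6, overlap=2)
best = retrieve(chunks, "refunds")
```

The overlap keeps a sentence that straddles a chunk boundary from being cut off in both chunks, which is why most pipelines expose it as a setting.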

The Workflow engine connects multi-step AI processes to external databases, RAG pipelines, or human approval steps. The event-driven architecture processes documents asynchronously, maintaining speed even with high volumes.

SDKs for Python and TypeScript include prebuilt connectors for vector databases and language models. Customize retrieval strategies, feedback loops, and how agents learn from corrections.

Finance and manufacturing teams rely on LlamaIndex for document workflows that used to eat up hours: summarizing reports, analyzing invoices, and running compliance checks on contracts.

The modular architecture requires understanding how pipelines, nodes, and document stores interact. Smaller projects may not need the framework's full capabilities, making simpler tools more appropriate.

LlamaIndex rewards investment in RAG applications and document-heavy workflows where component reusability and processing depth justify the initial learning curve.

Pricing

LlamaIndex offers a free plan. The paid plans start at $50/month (Starter) and $500/month (Professional).

7. LangGraph: Best for graph-based agents

What does it do? LangGraph lets developers build long-running, stateful AI agents with full control over how they think, act, and recover mid-process.

Who is it for? Teams that want to design complex, custom agent workflows instead of relying on prebuilt templates.

LangGraph provides granular control over agent state, execution flow, and decision-making logic. Developers define how agents reason through problems, when they pause for human approval, and how they handle multi-step processes.

The framework's checkpointing system preserves agent state across interruptions.

Long-running workflows such as document analysis, code generation, and research synthesis can pause mid-execution and resume without losing context or progress. This persistence layer handles failures gracefully, reducing the need for manual intervention.
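The idea behind checkpointing can be sketched with nothing more than a JSON file. This is a simplified illustration, not LangGraph's checkpointer API; the step names and the `fail_at` parameter are hypothetical and exist only to simulate a crash.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional

STEPS = ["load_document", "extract_clauses", "summarize"]  # hypothetical


def run_workflow(checkpoint: Path, fail_at: Optional[str] = None) -> dict:
    """Run STEPS in order, saving progress after each one so a later
    call can resume where a failed run stopped."""
    if checkpoint.exists():
        state = json.loads(checkpoint.read_text())
    else:
        state = {"done": []}
    for step in STEPS:
        if step in state["done"]:
            continue  # finished in an earlier run, skip it
        if step == fail_at:
            raise RuntimeError(f"simulated failure during {step}")
        state["done"].append(step)
        checkpoint.write_text(json.dumps(state))
    return state


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "checkpoint.json"
    try:
        run_workflow(path, fail_at="extract_clauses")  # first run crashes
    except RuntimeError:
        pass
    saved = json.loads(path.read_text())
    resumed = run_workflow(path)  # second run skips the finished step
```

After the simulated crash, the file still records `load_document` as done, so the second call picks up at `extract_clauses` instead of starting over.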

LangSmith integration delivers full observability. Runtime metrics, execution traces, and state visualizations show exactly how agents move through decision trees.

Streaming output displays token generation in real-time, making debugging more transparent than post-execution log analysis.

LangGraph requires architectural thinking. You wire together state management, memory systems, and approval workflows manually. The framework's rapid development cycle means older API patterns get deprecated, occasionally requiring migration work for established implementations.

The payoff is that workflows running for hours or days stay stable, and the underlying mechanics remain visible rather than abstracted away.

Teams that need to understand and control agent behavior at each step will find the engineering investment worthwhile.

Pricing

LangGraph has a free plan. Paid plans start at $39/seat/month.

8. Haystack: Best for RAG and multimodal AI

What does it do? Haystack lets you build production-ready AI workflows that combine large language models with external tools and data sources.

Who is it for? Developers and teams that need flexible, open-source infrastructure for building RAG, conversational, or multimodal AI applications.

Haystack combines chat models, retrieval pipelines, image processing, and custom tools within a unified workflow architecture. The modular design allows mixing components from different providers: pair OpenAI for generation with Pinecone for retrieval, or combine Cohere embeddings with local image models.

Agents operate through prompt-driven templates. Define behavior by specifying prompts and attaching functions, rather than configuring complex orchestration rules.

Haystack components convert into callable tools, enabling extension without framework rewrites. Haystack also handles multimodal workflows natively.

Your AI agents can process text documents, extract image metadata, and synthesize outputs that combine both data types. This matters for use cases like document analysis, where text and visual elements carry equal weight.

deepset Studio provides a visual pipeline builder. Drag components, wire connections, and test outputs without writing Python. Teams with mixed technical backgrounds can prototype together, then export to code when ready for production.

The modular setup takes a bit to click. You have to figure out how pipelines, nodes, and document stores fit together, which can feel like overkill if you just want something running fast.

Haystack is best suited for teams building extensive RAG and document-processing workflows. Its modular architecture supports component reuse, multimodal inputs, and scalable pipelines that justify the initial setup effort.

Pricing

Haystack is open source and free to use; pricing for deepset Cloud (the managed platform) is available upon request.

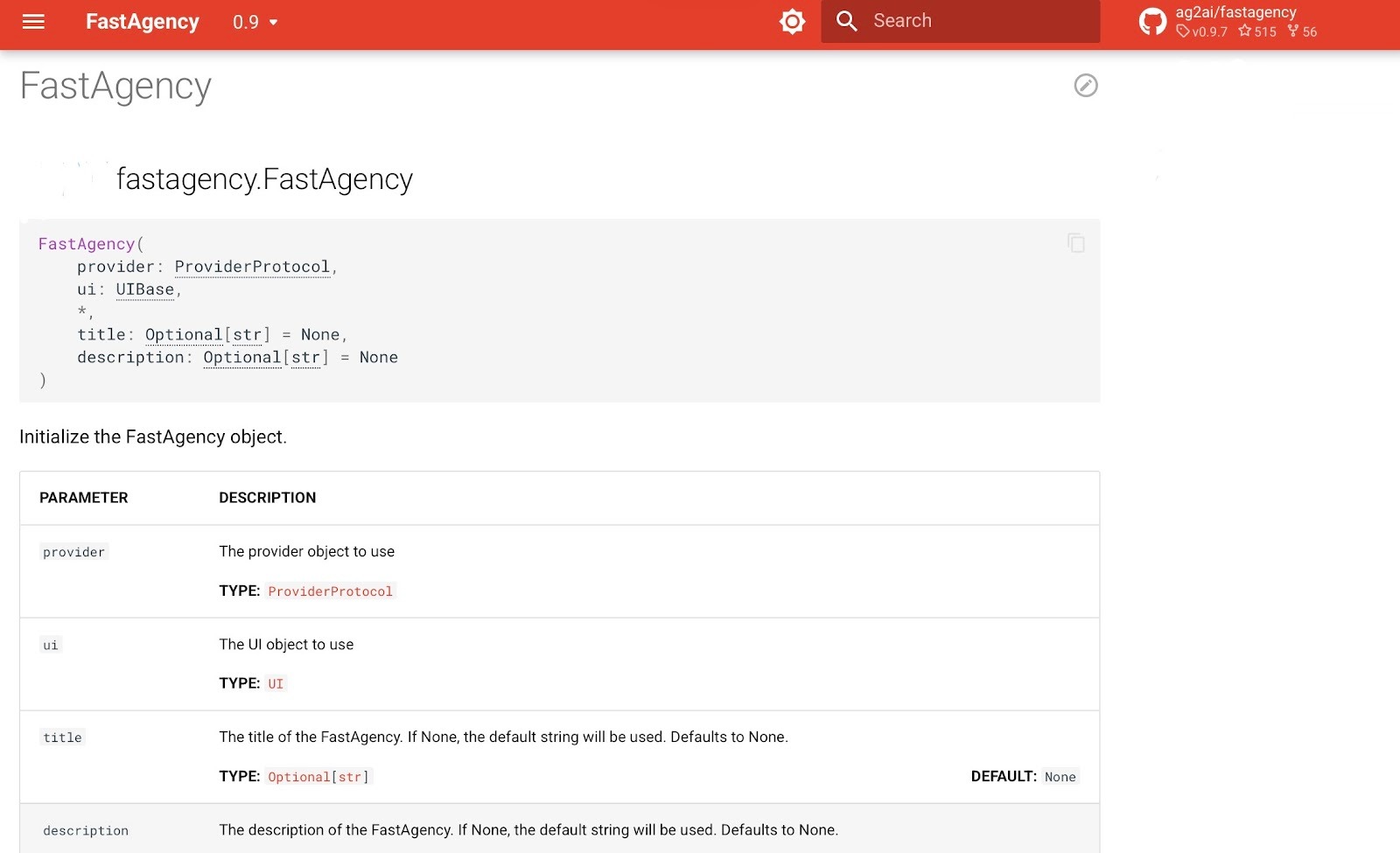

9. FastAgency: Best for high-speed inference and production scaling

What does it do? FastAgency helps developers turn their agent prototypes into production applications that run across console and web interfaces with minimal setup.

Who is it for? Freelancers, digital agencies, and small teams that want to move from Jupyter notebooks or local builds to full production systems quickly.

FastAgency converts workflows built on AG2 (an open-source agent orchestration toolkit compatible with AutoGen) into deployable applications across console, web, and distributed environments.

Write agent logic once, then run it in multiple contexts without rewriting integration code.

The framework imports OpenAPI specifications to generate API connectors automatically. Point it at a third-party service definition, and FastAgency builds the client interface and handles request formatting. This reduces manual integration work for external data sources.
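To give a feel for what generating connectors from a specification means, here is a small plain-Python sketch. It is not FastAgency's API. The `SPEC` dictionary is a heavily trimmed, made-up OpenAPI document, and the connectors only format the request they would send rather than calling a network.

```python
from typing import Callable

# Hypothetical, heavily trimmed OpenAPI document for illustration only.
SPEC = {
    "servers": [{"url": "https://api.example.com"}],
    "paths": {
        "/weather/{city}": {"get": {"operationId": "getWeather"}},
        "/alerts/{region}": {"get": {"operationId": "getAlerts"}},
    },
}


def make_connector(base_url: str, path: str, method: str) -> Callable[..., dict]:
    def connector(**params: str) -> dict:
        # Fill path placeholders such as {city} from keyword arguments.
        return {"method": method.upper(),
                "url": base_url + path.format(**params)}
    return connector


def build_connectors(spec: dict) -> dict[str, Callable[..., dict]]:
    """Create one callable per operation, keyed by its operationId."""
    base_url = spec["servers"][0]["url"]
    connectors = {}
    for path, methods in spec["paths"].items():
        for method, operation in methods.items():
            connectors[operation["operationId"]] = make_connector(
                base_url, path, method)
    return connectors


connectors = build_connectors(SPEC)
request = connectors["getWeather"](city="Oslo")
```

An agent can then be handed `connectors` as its tool set, with each `operationId` acting as the tool name.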

The Tester Class enables automated verification of multi-agent workflows.

Just define test scenarios that simulate agent interactions, then run them in CI pipelines to catch regressions before deployment.

The CLI handles orchestration commands, making it straightforward to deploy or update agents from terminal environments.

FastAgency currently supports only the AG2 runtime. Teams using other frameworks need to migrate their agent implementations or build custom adapters. Some production deployments reveal customization constraints when scaling beyond standard use cases.

The framework prioritizes deployment velocity. Teams that iterate frequently on agent behavior and need rapid feedback cycles will benefit from the reduced configuration overhead between development and production environments.

Pricing

Pricing is available on request.

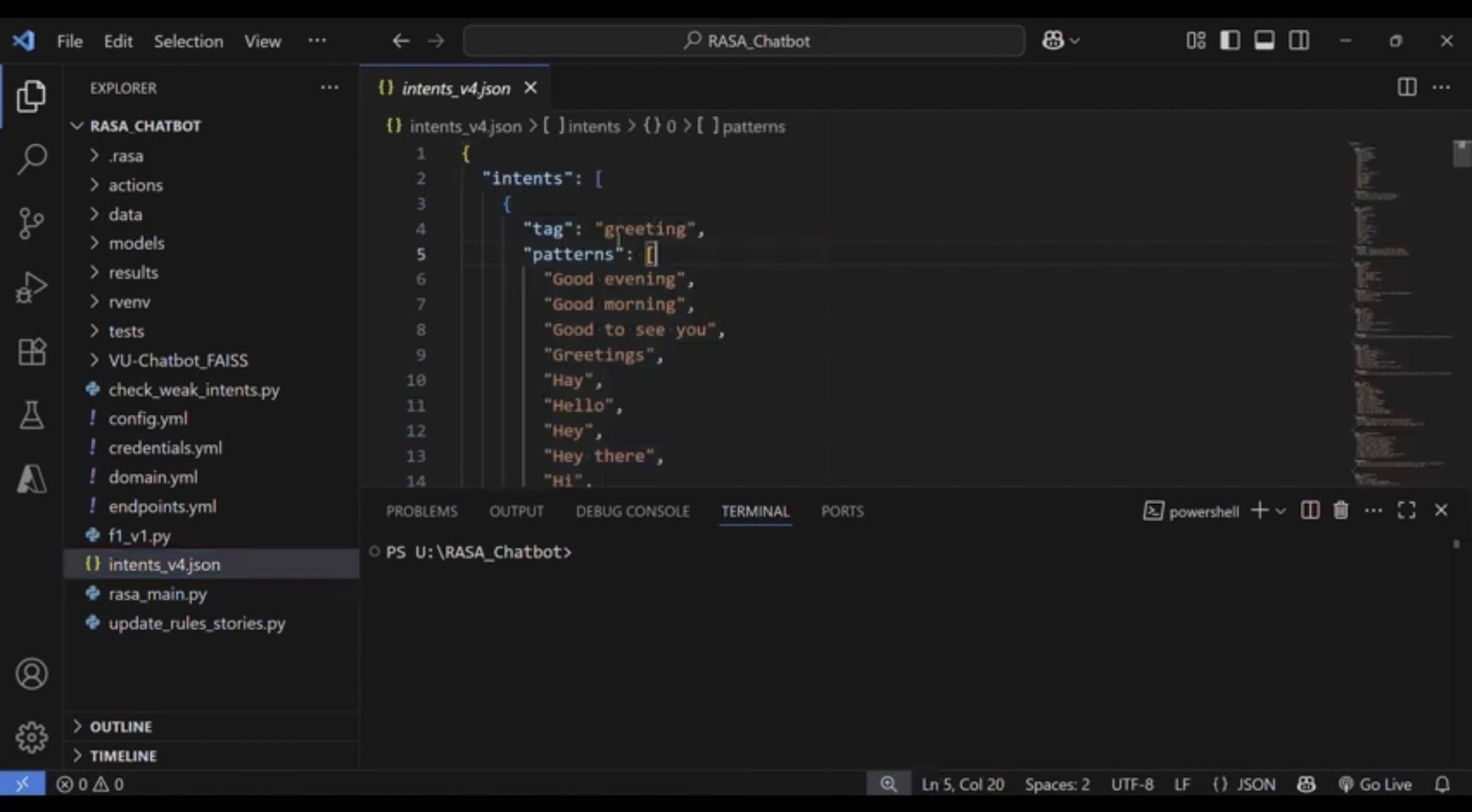

10. Rasa: Best for chatbots and voice assistants

What does it do? Rasa gives developers and teams the tools to build conversational and voice AI that’s private, customizable, and ready for production.

Who is it for? Companies and developers who want full control over their assistants.

Rasa prioritizes infrastructure ownership and customization depth. Everything runs on private infrastructure, giving teams complete control over data, model training, and conversation logic.

Rasa Studio handles conversation design through visual flow builders. Define branching paths with conditional logic, create loop structures for iterative dialogues, and set constraints that keep conversations on track.

Voice testing includes tone adjustments and real-time transcript analysis when connected to speech engines like Deepgram or Cartesia. The 2026 release adds silence detection and improved dialogue state management.

These features address common voice interaction problems where pauses or background noise disrupt conversation flow.

Rasa Pro extends the core framework with generative dialogue capabilities and multi-model orchestration.

Custom NLU training lets teams fine-tune language understanding for domain-specific terminology or regional dialects.

Setup requires infrastructure expertise. Teams handle model deployment, configure private servers, and maintain NLU training pipelines. The technical barrier is higher than hosted alternatives, but the tradeoff is complete system ownership.

Pricing

Rasa offers a Free Developer Edition. The Growth Plan starts at $35,000/year.


Top 10 AI agent frameworks: At a glance

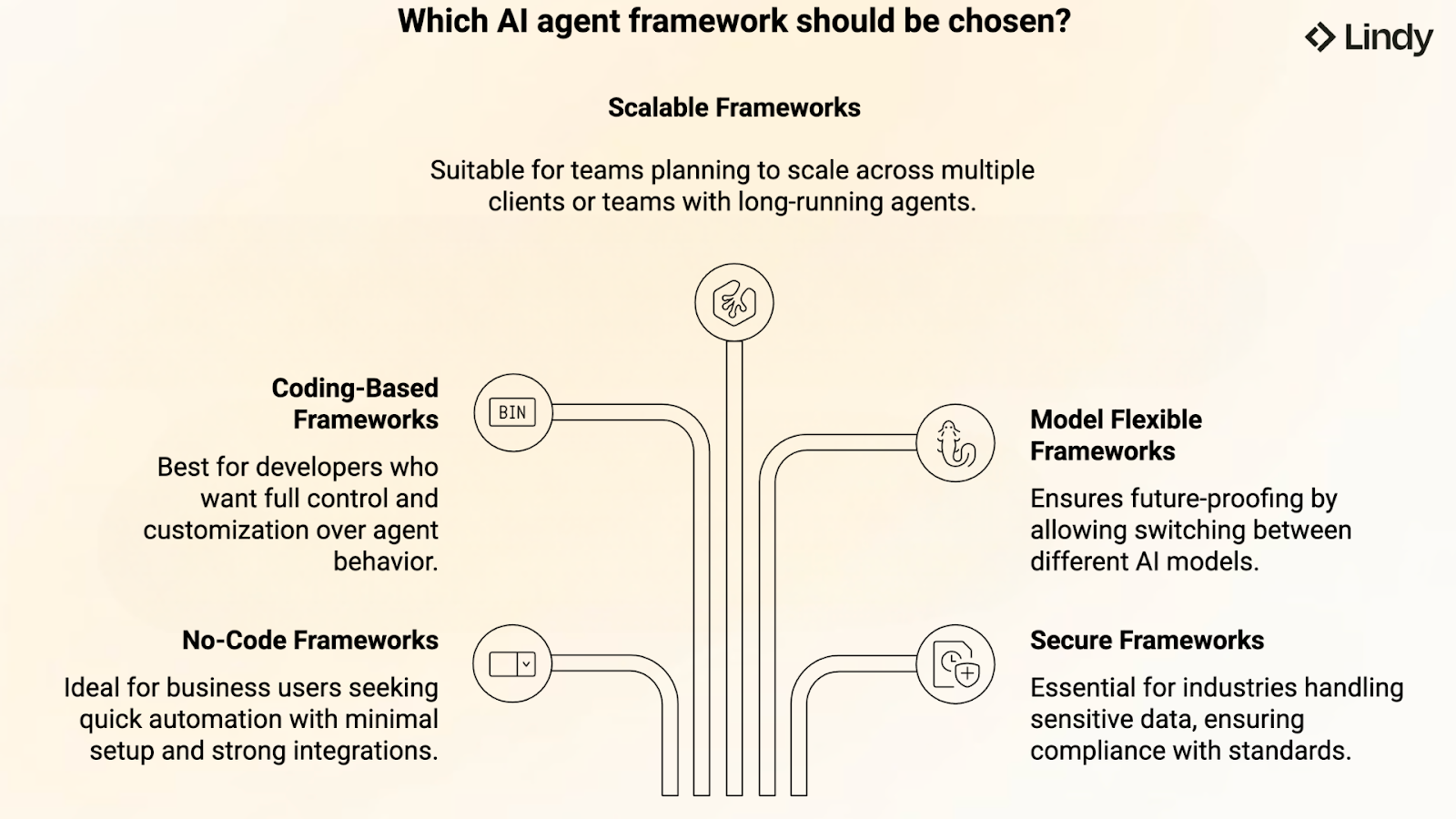

What to consider while choosing an AI agent framework

Choosing the right AI agent framework depends on what you want to build and how technical your team is. Some are built for quick automation, while others offer extensive control but require more setup.

If you’re a business user, pick a framework that handles the complexity for you and connects easily to your existing tools. Platforms like Lindy or CrewAI let you automate real work without writing code.

If you’re a developer who prefers control, frameworks like LangChain or AutoGen are better suited for your goals. They let you shape how agents think, remember, and act with as much detail as you want.

Here are a few key things to keep in mind:

- Ease of use: Check how much setup is needed. A no-code framework helps you get results faster, while a coding-based one gives more control later on.

- Integration options: Pick a framework that connects easily with your data, CRMs, and tools. Good integrations save hours of manual work.

- Scalability: If you plan to scale across teams or clients, look for frameworks that support long-running agents and enterprise deployment, like CrewAI or LangGraph.

- Model flexibility: Choose frameworks that let you switch between GPT, Claude, Gemini, and other models. It keeps your stack future-proof.

- Monitoring and debugging: As workflows grow, visibility matters. Tools like LangSmith or AutoGen’s tracing help you understand what your agents are doing.

- Security and privacy: For industries dealing with sensitive data, compliance is essential. Frameworks like Rasa and Lindy already support GDPR and SOC 2 standards.

At the end of the day, the best framework is the one that fits your workflow, and not just the one with the longest feature list.

Try Lindy: An AI agent that handles support, outreach, and automation

Lindy uses conversational AI that handles not just chat, but also lead gen, meeting notes, and customer support. It handles requests instantly and adapts to user intent with accurate replies.

Here's how Lindy goes the extra mile:

- Fast replies in your support inbox: Lindy answers customer queries in seconds, reducing wait times and missed messages.

- 24/7 agent availability for async teams: You can set Lindy agents to run 24/7, which suits async workflows and round-the-clock support.

- Support in 30+ languages: Lindy’s phone agents support over 30 languages, letting your team handle calls in new regions.

- Add Lindy to your site: A simple code snippet embeds Lindy on your site, so visitors get answers without leaving the page.

- Integrates with your tools: Lindy integrates with tools like Stripe and Intercom, helping you connect your workflows without extra setup.

- Handles high-volume requests without slowdown: Lindy handles any volume of requests and even teams up with other instances to tackle the most demanding scenarios.

- Lindy does more than chat: There’s a huge variety of Lindy automations, from content creation to coding. Check out the full Lindy templates list.

Try Lindy free and automate your first 40 tasks today.

FAQs

1. What are the best virtual machine options for running AI agents that generate and execute code with minimal latency?

The best virtual machine options for running AI agents that generate and execute code with minimal latency are AWS EC2 G5 instances, Google Cloud A2, and Azure NV-series.

When you self-host models, frameworks like LangChain, LangGraph, and AutoGen benefit most from these GPU-backed environments, since they support long-running, code-intensive, and reasoning-heavy workflows with near real-time responsiveness.

2. What voice engines integrate best with agent frameworks?

The voice engines that integrate best with AI agent frameworks are Deepgram, Cartesia, and ElevenLabs. Frameworks such as Rasa and Lindy already support these natively, while the OpenAI Responses API adds real-time streaming for conversational voice agents. Together, they make it easy to build assistants that can listen, respond, and adapt naturally.

3. What is the best low-latency LLM platform for AI agent orchestration?

The best low-latency LLM platform for AI agent orchestration is OpenAI’s GPT-4o, which delivers fast, high-quality responses with strong reasoning capabilities.

Frameworks like CrewAI, LangGraph, and FastAgency can use GPT-4o to power their orchestration layers, allowing agents to coordinate tasks with minimal delay.

4. What are the top AI agent frameworks in 2026?

The top AI agent frameworks in 2026 are Lindy, LangChain, CrewAI, OpenAI Responses API, AutoGen, LlamaIndex, LangGraph, Haystack, FastAgency, and Rasa. Each has a distinct purpose: for example, Lindy for no-code business workflows, LangChain for custom pipelines, CrewAI for orchestration, and Rasa for private conversational AI.

5. How can I choose a scalable AI agent framework for complex workflow management?

To choose a scalable AI agent framework for complex workflow management, focus on durability, state management, and multi-agent support. CrewAI, LangGraph, and LangChain are ideal options since they handle persistent memory, checkpointing, and long-running operations while giving you clear observability tools for debugging.

6. How can I choose an AI agent framework for customer support automation?

To choose an AI agent framework for customer support automation, start with Lindy or Rasa. Lindy is great for automating repetitive communication tasks through its no-code builder and integrations with CRMs and Slack. Rasa gives you total control and privacy for voice and multilingual chat assistants.

7. What are the best tools for collaborative AI agent development with version control?

The best tools for collaborative AI agent development with version control are LangSmith and AutoGen Studio. LangSmith is built into the LangChain ecosystem and lets teams trace and test workflows together.

AutoGen Studio adds a visual interface for debugging and sharing setups. FastAgency complements them with its CI testing framework for team-based development.

8. What are the top-rated voice AI agent frameworks for businesses?

The top-rated voice AI agent frameworks for businesses are Rasa, Lindy, and the OpenAI Responses API. Rasa provides deep dialogue and flow control for call or chat support. Lindy offers phone-ready agents with context memory, and the Responses API adds instant voice-to-text and speech synthesis capabilities.

9. Who offers the best tooling to trace multi-step agent workflows without a heavy setup burden?

The best tooling to trace multi-step agent workflows without a heavy setup burden comes from LangGraph and LangSmith. These tools let you visualize every state change, branch, and run in your workflow.

AutoGen’s OpenTelemetry integration also adds real-time tracing, giving full visibility with minimal configuration.

10. What is the best hosting option for LLM agents that require rapid function calls?

The best hosting option for LLM agents that require rapid function calls is cloud-based orchestration through AWS Lambda, Google Cloud Run, or LangChain Cloud.

These work smoothly with frameworks like CrewAI, FastAgency, and LangChain, keeping response times low and scaling automatically when workloads spike.

11. How does LangGraph compare to other AI agent frameworks?

LangGraph compares to other AI agent frameworks by offering deeper control over state, persistence, and long-running workflows.

While LangChain focuses on modular chains and CrewAI on orchestration, LangGraph specializes in durable execution, which is ideal for developers who want to control every step of an agent’s reasoning process.

12. What is the best lightweight agent security platform for mobile AI agents?

The best lightweight agent security platforms for mobile AI agents include Firebase Auth and Supabase, which work well with frameworks like Lindy and Rasa. Both handle authentication and encryption efficiently, while Rasa and Lindy ensure privacy through GDPR and SOC 2 compliance for mobile-friendly agent setups.

13. What open-source frameworks exist for voice agent development?

The open-source frameworks that exist for voice agent development include Rasa and Haystack Agents. Rasa’s latest update adds voice testing and real-time transcript inspection through Rasa Studio, while Haystack extends multimodal capabilities to support both text and voice workflows.
