I tested more than a dozen LangChain-based projects and frameworks to see which ones actually handle real-world speed, data, and governance needs. Here are the best LangChain alternatives in 2026, organized by use case and performance.

8 LangChain Alternatives for Many Use Cases in 2026: TL;DR

When to consider a LangChain alternative

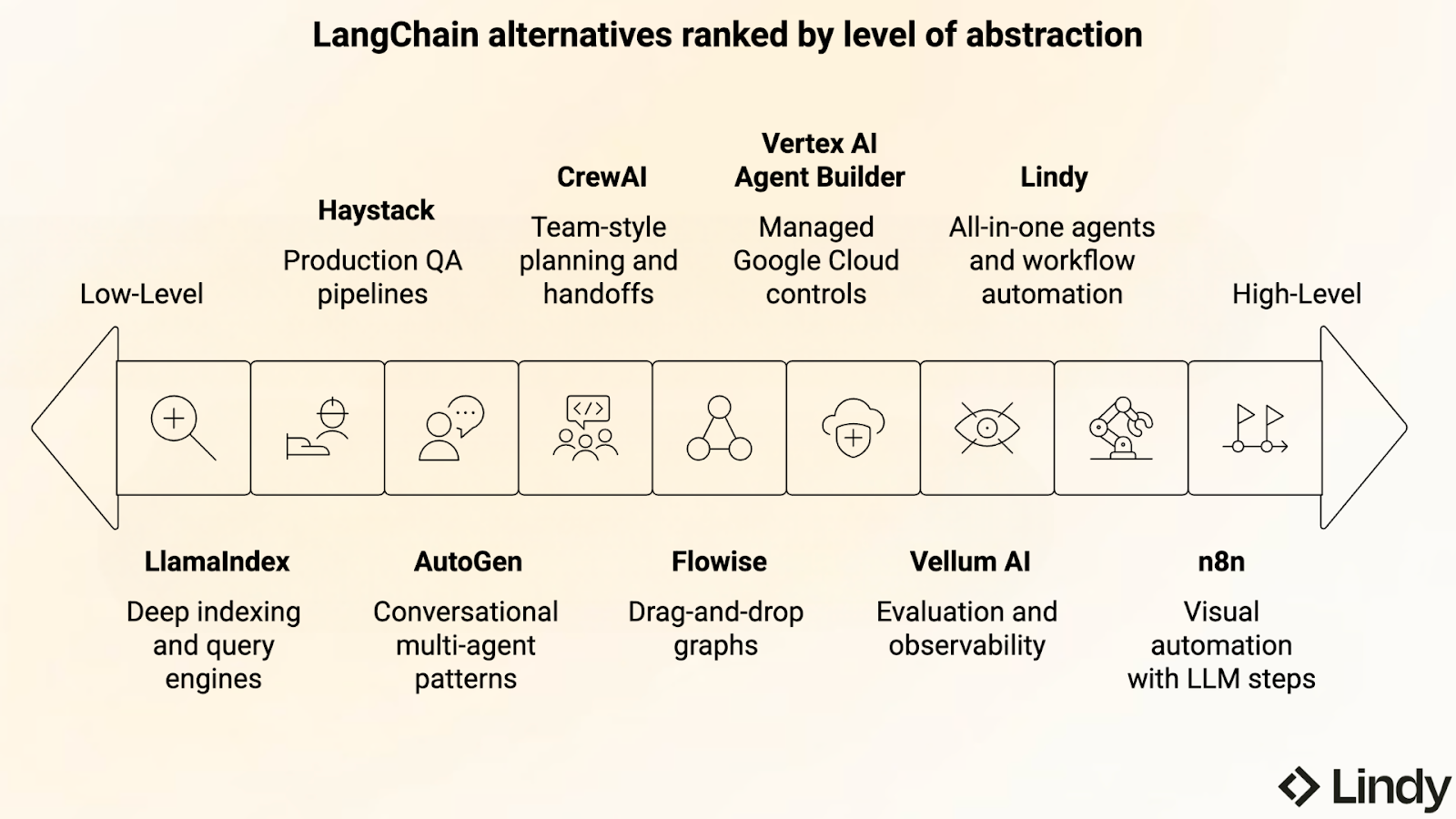

- You live in RAG land: If most work is retrieval over private data, move to a RAG-first stack built for indexing and evaluation. Start small, measure accuracy, then scale. Try LlamaIndex for deep indexing or Haystack for production QA pipelines.

- You need agents that work together: For systems with planners, critics, and tool users, use frameworks with built-in agent roles and routing. Sketch message flow first, then add tools. Try AutoGen or CrewAI for multi-agent coordination.

- Your team wants a visual builder: If ops need to ship workflows fast without Python, use a drag-and-drop builder. Prototype as a graph, share links for feedback. Try Flowise for quick prototypes, or n8n if you need a stable visual builder.

- Compliance or scale comes first: If SSO, audit logs, and approvals block launches, choose managed environments with governance built in. Try Vertex AI Agent Builder or Vellum.

- Latency or cost is breaking UX: Long contexts slow everything down. Add caching, trim inputs, and route by model. LlamaIndex helps with retrieval tuning, while Vertex AI Agent Builder adds managed routing.

- You want faster time-to-value: If you care more about outcomes than custom chains, pick automation-first tools. Try Lindy for all-in-one agents or n8n for visual automations.

- Your team isn’t code-first: If problem solvers aren’t Python pros, use shared UIs for prompts and traces. Try Flowise for fast collaboration or Vellum for prompt management.

- You need clean ops in production: Prioritize tracing, rollback, and release hygiene. Vellum covers evaluation and lineage, while Haystack delivers stable pipelines.
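
To make the latency and cost advice above concrete, here is a minimal sketch of caching, input trimming, and model routing in plain Python. It is not tied to any framework in this list. The model names, the character budget, and the `call_model` stub are all assumptions for illustration, so swap in your own provider client and thresholds.

```python
from functools import lru_cache

MAX_CHARS = 2000  # assumed input budget; tune it for your models


def trim_input(text: str, max_chars: int = MAX_CHARS) -> str:
    """Keep only the most recent part of a long context."""
    return text if len(text) <= max_chars else text[-max_chars:]


def pick_model(prompt: str) -> str:
    """Route short prompts to a cheaper model (both names are placeholders)."""
    return "small-fast-model" if len(prompt) < 500 else "large-capable-model"


def call_model(model: str, prompt: str) -> str:
    """Stand-in for a real LLM call; replace with your provider's client."""
    return f"[{model}] answer to {len(prompt)} chars"


@lru_cache(maxsize=1024)
def cached_answer(prompt: str) -> str:
    """Identical prompts are served from memory instead of a new model call."""
    return call_model(pick_model(prompt), prompt)


def answer(raw_prompt: str) -> str:
    """Trim first so near-identical long inputs can share a cache entry."""
    return cached_answer(trim_input(raw_prompt))
```

Measuring hit rate with `cached_answer.cache_info()` is a cheap way to check whether caching is worth keeping before you reach for a managed routing layer.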

8 Best LangChain alternatives in 2026

1. Lindy: Best alternative overall

What is it: Lindy is a no-code AI agent platform that helps businesses automate real work across sales, marketing, meetings, and customer support.

Choose Lindy over LangChain if: You want to build production-ready agents that actually do the work, not “frameworks” that demand weeks of wiring.

We built Lindy so teams could stop experimenting and start shipping. You don't need to know Python or chain logic. Just tell your agent what outcome you want, connect your apps, and it gets to work.

Sales teams use it to chase leads, marketing launches campaigns faster, and busy execs finally get a digital assistant that actually remembers context.

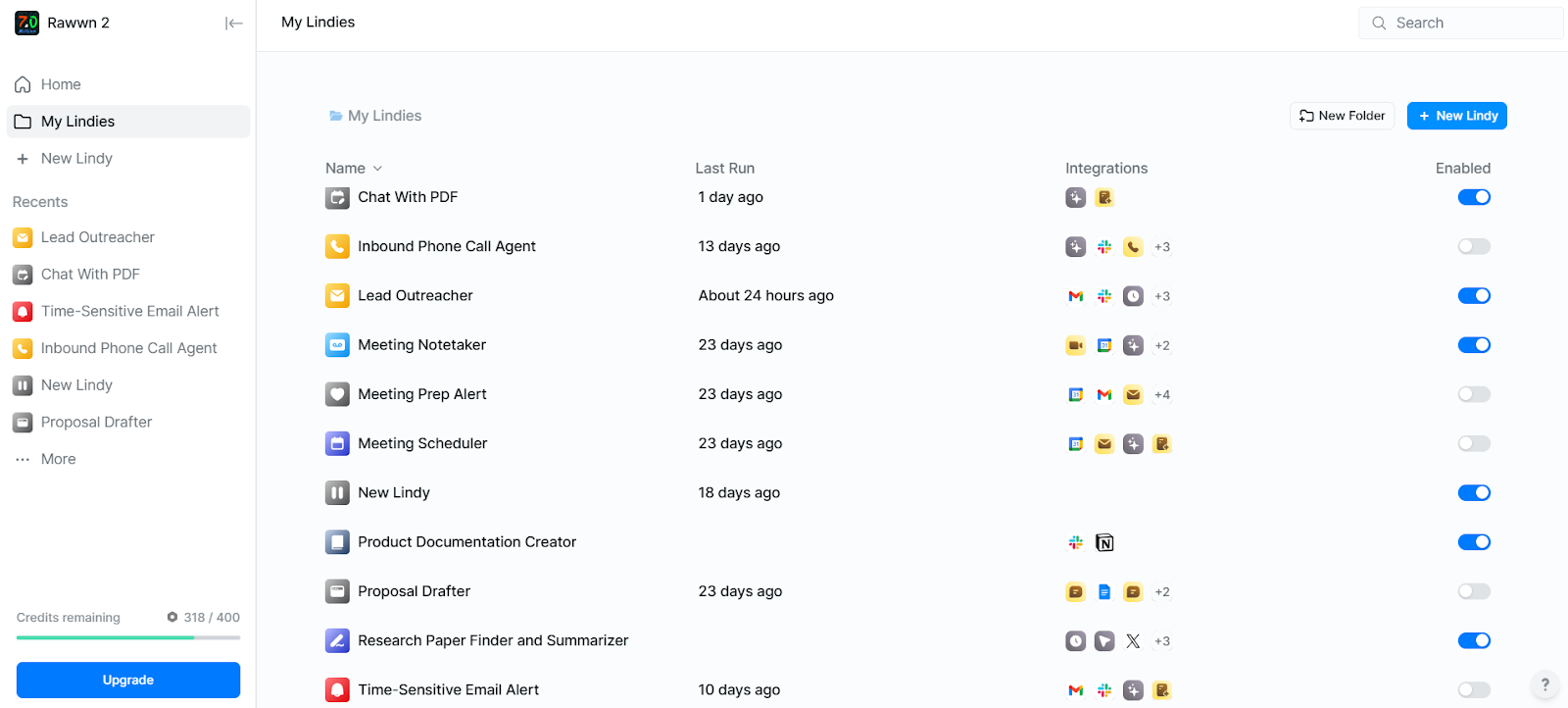

Lindy is a production environment for real workflows, not a sandbox for testing prompts. You can run multi-step automations, loop through data, review drafts before they go out, and connect everything from Slack to Notion in a few clicks.

Every workflow runs with complete visibility. You can trace every decision, review context, and jump in when human oversight matters.

When we tested Lindy internally, we threw it a week's worth of inbound support emails to triage and summarize. It took under 10 minutes to process 200 messages and hand back accurate summaries with suggested replies. That's the kind of result that sticks: less noise, more action.

Lindy connects to thousands of apps and fits naturally into your existing stack. It scales with your team, keeps your data private, and lets you choose the right AI model for each task.

Getting started is fast. Lindy Academy has step-by-step guides and pre-built templates for common workflows like sales outreach, customer support, and meeting notes. New teams can have their first automation running in minutes without building from scratch.

Pricing

Lindy starts with a free plan offering 40 tasks and 400 credits every month. Paid plans begin at $49.99/month (Pro) and scale up to $199.99/month (Business).

2. LlamaIndex: Best for data-centric tasks and RAG

What is it: LlamaIndex helps connect large language models with your private and structured data to build context-rich AI applications.

Choose LlamaIndex over LangChain if: Your workflows revolve around retrieval-augmented generation and you need dependable ways to parse, index, and query complex data.

LlamaIndex is made for people who spend more time wrangling data than writing prompts. It gives large language models a clean path to understand your files, spreadsheets, and internal systems, so answers come with real evidence instead of vague guesses.

You can pull in content from over a hundred sources, from PDFs and Word documents to Slack threads and Google Drive folders, and then decide exactly how that information should be indexed.

The framework feels polished and predictable. It lets you choose between vector, tree, keyword, or graph indexing depending on your use case, which makes it easy to tune for both small and very large datasets.

The query and chat engines power retrieval-augmented generation without needing extra code.

I tested it with a few hundred company reports, asking "Which quarter showed the largest margin drop?" LlamaIndex parsed the PDFs, built a structured index, and the answer came back in seconds, supported by the exact document references.
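
If you are wondering what keyword indexing with document references looks like under the hood, here is a toy sketch in plain Python. This is not LlamaIndex's API or its implementation. The file names and report text are made up, and a real index would add embeddings, chunking, and ranking far beyond simple word counts.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each word to the set of document names that contain it."""
    index = defaultdict(set)
    for name, text in docs.items():
        for word in tokenize(text):
            index[word].add(name)
    return index


def search(index: dict[str, set[str]], query: str) -> list[tuple[str, int]]:
    """Rank documents by how many distinct query words they contain."""
    scores = defaultdict(int)
    for word in set(tokenize(query)):
        for name in index.get(word, ()):
            scores[name] += 1
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Made-up sample data, for illustration only
docs = {
    "q1_report.txt": "Gross margin held steady in the first quarter.",
    "q2_report.txt": "The largest margin drop came in the second quarter.",
    "hr_policy.txt": "Vacation requests need manager approval.",
}
index = build_index(docs)
results = search(index, "Which quarter showed the largest margin drop?")
print(results)  # each hit carries the source file name as its reference
```

Because every hit is a file name, the answer can always point back to the document it came from, which is the property that matters most in RAG.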

So, if your AI work depends on structured, document-heavy data and you want reliable retrieval, LlamaIndex gives you the control and transparency that LangChain often leaves to custom code.

It’s a solid choice for anyone serious about RAG-based applications.

Pricing

LlamaIndex offers a free plan. The paid plans start at $50/month (Starter) and $500/month (Professional).

3. Haystack: Best for production-grade RAG and AI pipelines

What is it: Haystack helps teams move from LLM prototypes to fully deployed, scalable applications.

Choose Haystack over LangChain if: You want to build retrieval-augmented or agent-based apps that can handle real-world use and production demands.

Haystack is a production-grade framework for building retrieval and agent pipelines that actually scale. It lets you connect different building blocks, such as retrievers, rankers, and generators, into clean, flexible pipelines.

These pipelines then power question answering, conversational agents, and even multi-modal applications combining text, images, and audio.
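
To picture how retrievers, rankers, and generators chain together, here is a rough sketch of the pattern in plain Python. It does not use Haystack's classes or API. The step functions, the `state` dictionary, and the sample manuals are hypothetical, and the generator is a stub standing in for a model call.

```python
from typing import Callable

Step = Callable[[dict], dict]


def overlap(query: str, document: str) -> int:
    """Count distinct words shared by the query and a document."""
    return len(set(query.lower().split()) & set(document.lower().split()))


def retriever(state: dict) -> dict:
    """Keep documents that share at least one word with the query."""
    state["candidates"] = [
        d for d in state["documents"] if overlap(state["query"], d) > 0
    ]
    return state


def ranker(state: dict) -> dict:
    """Order candidates by word overlap with the query, best first."""
    state["ranked"] = sorted(
        state["candidates"], key=lambda d: -overlap(state["query"], d)
    )
    return state


def generator(state: dict) -> dict:
    """Stand-in for an LLM call: cite the top snippet as the answer."""
    top = state["ranked"][0] if state["ranked"] else "no match found"
    state["answer"] = f"Based on: {top}"
    return state


def run_pipeline(steps: list[Step], state: dict) -> dict:
    """Pass the shared state through each step in order."""
    for step in steps:
        state = step(state)
    return state


result = run_pipeline(
    [retriever, ranker, generator],
    {
        "query": "reset the router",
        "documents": [
            "Hold the button to reset the router",
            "Clean the filter monthly",
            "Warranty covers two years",
        ],
    },
)
print(result["answer"])
```

Keeping each step as a small function with the same signature is what makes it easy to swap a ranker or add a new stage without touching the rest.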

Haystack gives you plenty of freedom to mix what works best for your setup, since it integrates with model providers like OpenAI, Anthropic, and Mistral, and with Pinecone or Weaviate for vector storage.

Many teams choose Haystack because it supports Kubernetes workflows, pipeline serialization, and monitoring tools that make debugging and scaling straightforward.

When you test it with a few thousand product manuals in a RAG setup, Haystack quickly retrieves the right snippets and generates accurate summaries with direct references to source documents.

For teams who prefer a visual approach, deepset Studio lets you design and test pipelines in a clean interface, saving time for developers who want to validate ideas before deploying them.

Haystack stays reliable whether you run it locally or in the cloud, backed by clear docs and a practical setup. It's a good fit for teams that want their AI applications to perform consistently in production without giving up the flexibility of open source.

Pricing

The Haystack framework itself is open source and free to use. Pricing for deepset's commercial platform is not publicly available.

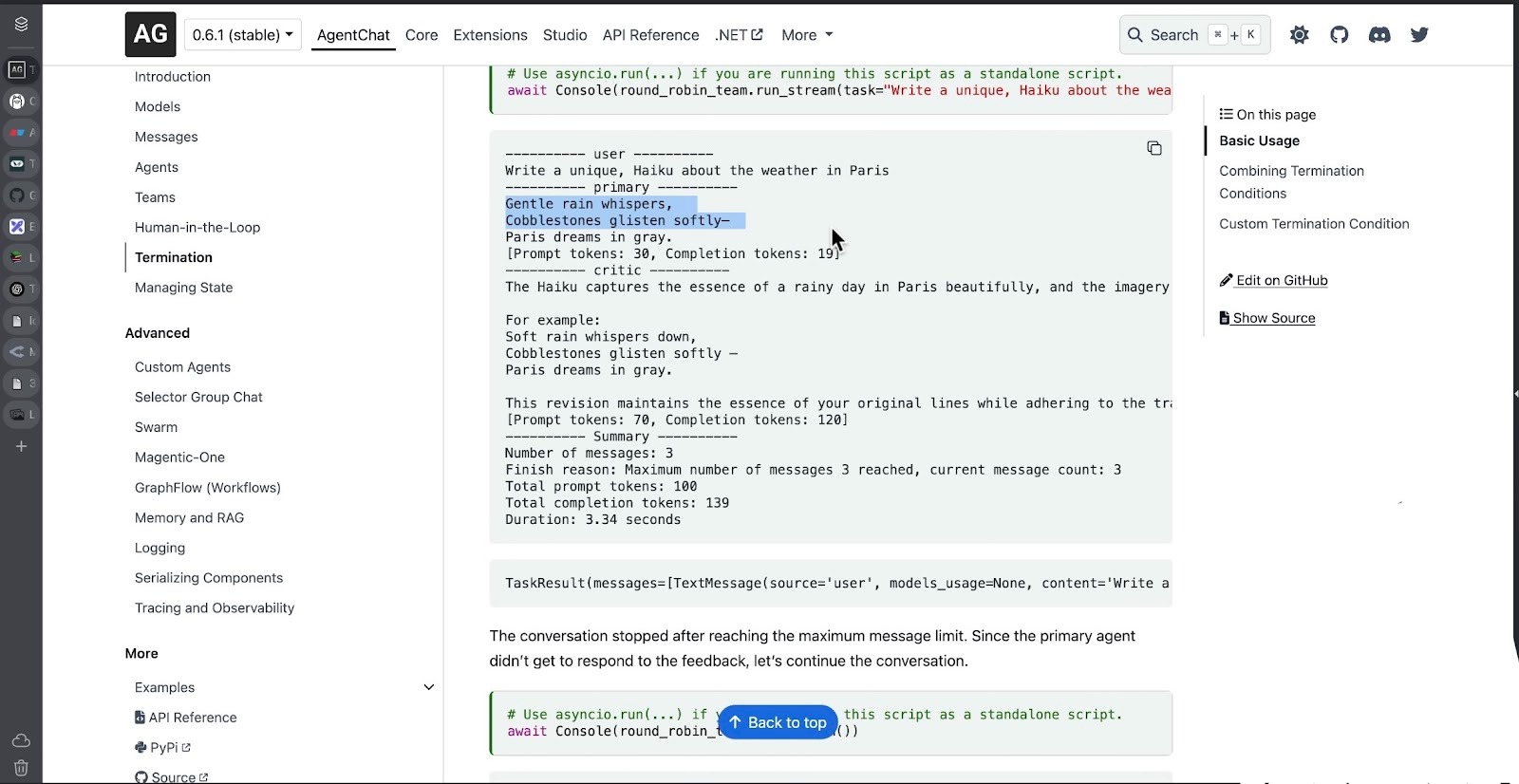

4. AutoGen: Best for multi-agent collaboration and research

What is it: AutoGen builds and coordinates multi-agent systems that can communicate, plan, and solve complex tasks together.

Choose AutoGen over LangChain if: You want fine-grained control over how AI agents interact, reason, and share work within a single framework.

AutoGen is a solid choice for anyone serious about exploring multi-agent collaboration. It lets you create networks of agents that communicate asynchronously, share context, and adapt their approach to each task.

You can define roles like planner, critic, executor, or researcher, then set how they talk to one another using event-driven messages or request–response patterns.

It’s not a visual builder, but that’s part of the appeal for technical teams.

Everything is modular and open, which means you can customize the memory, tools, and logic behind each agent.

You can set up two agents tasked with drafting a product summary and reviewing clarity, watch them debate in the logs until they land on something publishable, and see exactly how that back-and-forth exchange scales to harder problems.
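
Here is a stripped-down sketch of that draft-and-review loop in plain Python. It does not use AutoGen's classes. Both agents are hard-coded stubs where a real setup would call a model, and the `APPROVED` stop signal is an assumption made for this example.

```python
def writer(draft: str, feedback: str) -> str:
    """Stub writer agent: produce a first draft or apply reviewer feedback."""
    if not draft:
        return "Our product is a really very good tool that helps teams."
    if "filler" in feedback:
        return draft.replace("really very ", "")
    return draft


def reviewer(draft: str) -> str:
    """Stub reviewer agent: return feedback, or 'APPROVED' when satisfied."""
    if "really" in draft or "very" in draft:
        return "Remove filler words."
    return "APPROVED"


def collaborate(max_rounds: int = 5) -> tuple[str, list[str]]:
    """Alternate writer and reviewer turns until approval or the round cap."""
    draft, feedback, log = "", "", []
    for round_number in range(1, max_rounds + 1):
        draft = writer(draft, feedback)
        feedback = reviewer(draft)
        log.append(f"round {round_number}: {feedback}")
        if feedback == "APPROVED":
            break
    return draft, log


final_draft, log = collaborate()
print(final_draft)
print(log)
```

The round cap matters more than it looks: without it, two agents that never agree will keep spending tokens indefinitely.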

AutoGen also has strong observability features, full type-checking, and built-in support for OpenTelemetry so you can monitor how agents behave over time.

It runs in both Python and .NET, integrates easily with external LLMs like GPT-4 and Claude, and even supports multimodal inputs such as text and images.

Pricing

AutoGen is open source and free to use. Microsoft publishes the code under the MIT license.

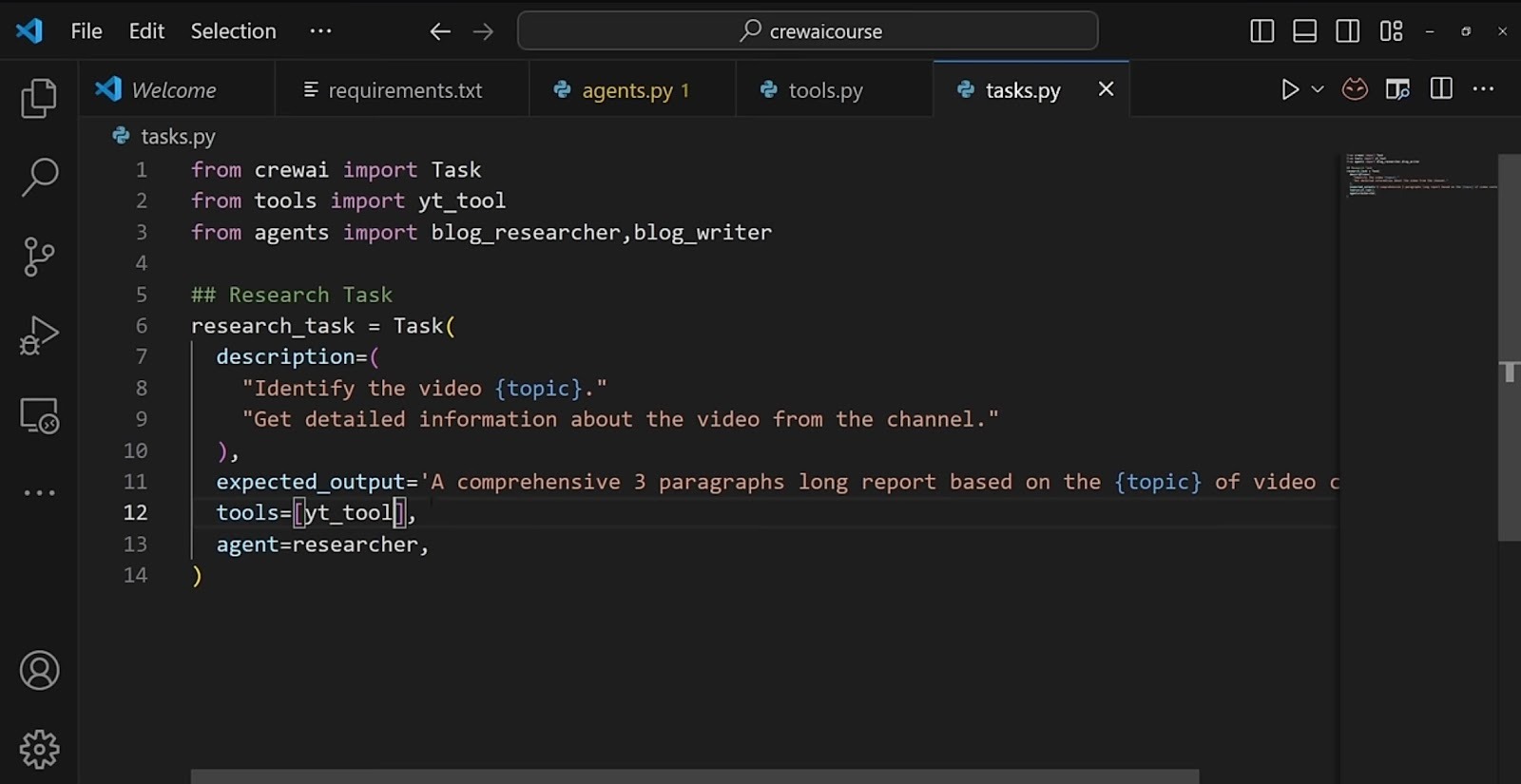

5. CrewAI: Best for team-based multi-agent collaboration

What is it: CrewAI helps organizations orchestrate, manage, and scale fleets of AI agents that work together on complex tasks from start to finish.

Choose CrewAI over LangChain if: You want coordinated agent teamwork across departments, not isolated chains or single-task scripts.

Instead of dealing with disconnected agents, CrewAI lets you build structured crews of AI agents. These collaborative agents then plan, reason, and execute tasks collectively.

You start by giving each agent a role. One focuses on research, another analyzes data, and a third turns the findings into something readable. They stay in sync through shared memory and coordination logic, which feels more like managing a small team than running scripts.
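
The role-plus-shared-memory idea can be sketched in a few lines of plain Python. This is not CrewAI's API. The `Agent` dataclass, the `run_crew` helper, and the lambda "workers" are hypothetical stand-ins for agents that would normally call a model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    role: str
    work: Callable[[dict], str]  # reads shared memory, returns this role's output


def run_crew(agents: list[Agent], topic: str) -> dict:
    """Run agents in order; each one writes its result into shared memory."""
    memory = {"topic": topic}
    for agent in agents:
        memory[agent.role] = agent.work(memory)
    return memory


crew = [
    Agent("researcher", lambda m: f"three facts about {m['topic']}"),
    Agent("analyst", lambda m: f"key trend drawn from {m['researcher']}"),
    Agent("writer", lambda m: f"Summary: {m['analyst']}."),
]
memory = run_crew(crew, "churn")
print(memory["writer"])
```

Each agent only sees what earlier roles left in memory, so the order of the crew is effectively the workflow.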

CrewAI connects easily with tools like Gmail, Salesforce, Notion, or HubSpot, so your agents can pull data, update records, or send results without switching contexts. Since decisions appear live through real-time tracing, I found it easy to oversee the agents and step in if needed.

In testing, a setup with one agent researching, one outlining, and one editing for tone worked smoothly. After a quick optimization pass, the output noticeably improved with less cleanup and more clarity.

CrewAI’s serverless architecture makes deployment simple, whether you’re running locally or in the cloud. It supports role-based access and centralized monitoring, which helps large teams maintain visibility and control.

The human-in-the-loop training option also adds reassurance when you want agents to stay aligned with company guidelines before scaling to full automation.

Unlike LangChain, which provides the components to build agents, CrewAI focuses on managing and coordinating them. It turns multi-agent collaboration into a structured, reliable workflow ready for real business operations.

Pricing

CrewAI offers a free plan. Paid plans start at $99/month.

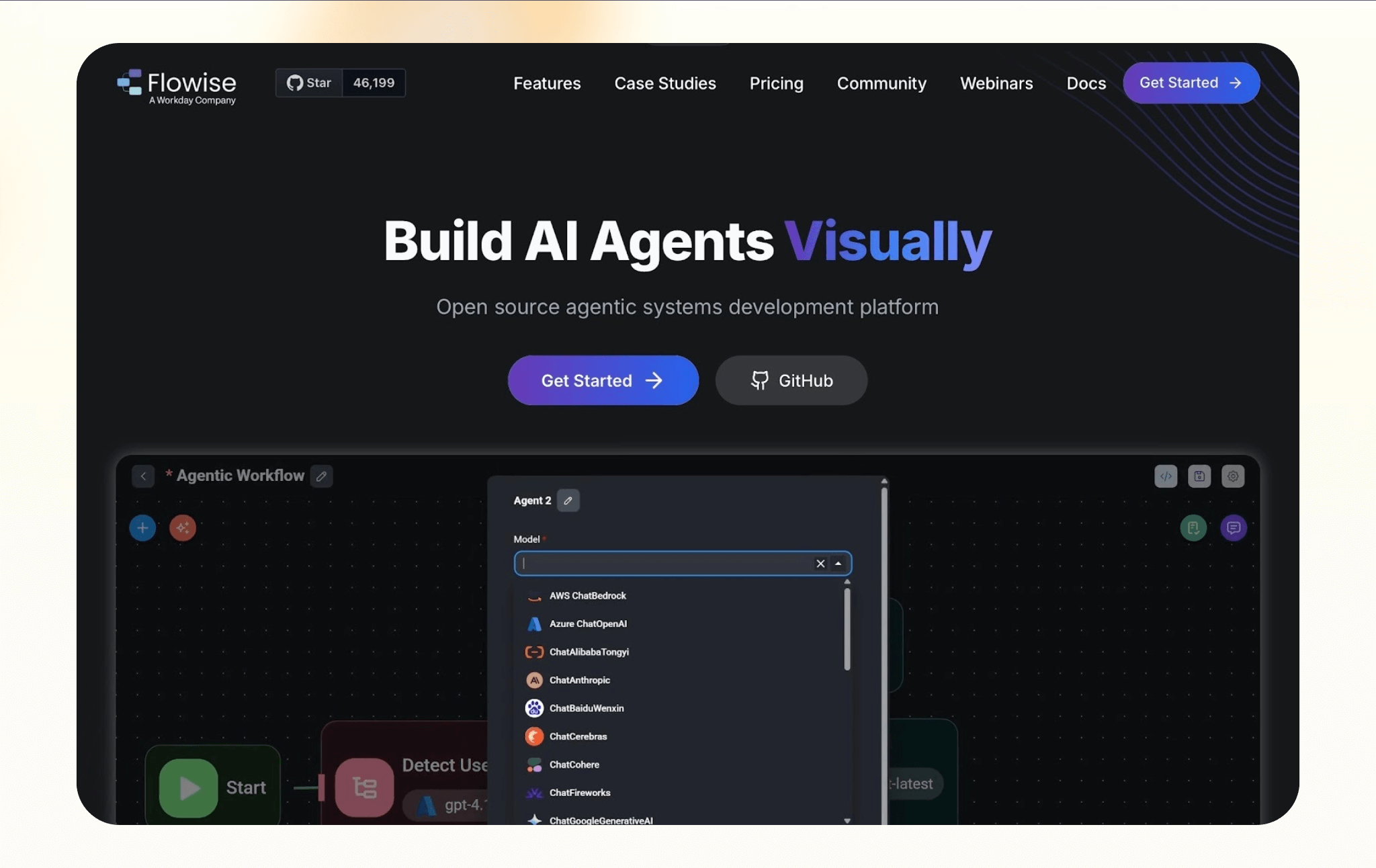

6. Flowise: Best for low-code and visual AI development

What is it: Flowise lets anyone design and deploy AI agents or LLM workflows visually, without needing deep programming knowledge.

Choose Flowise over LangChain if: You want to build, test, and scale AI workflows through an intuitive canvas that feels more like whiteboarding ideas than writing code.

Flowise brings clarity to the process of building AI systems. Instead of managing code blocks and debugging chains, you drag components into a visual canvas and watch how information flows in real time.

This makes it easy to map out how an assistant should think, retrieve data, or hand off tasks between tools.

Using Flowise, you can build everything from simple chatbots with knowledge retrieval to complex multi-agent systems with orchestrated workflows, all without writing a single line of code.

As someone who can’t stand a cluttered interface, I found the setup clean and easy to get the hang of. As a developer, you can test and iterate fast, export your workflow to production, and even scale to industrial applications once you've validated the approach.

When you test it with a retrieval workflow connecting a document index, memory node, and text generator, the entire pipeline comes together in under 10 minutes. The responses stay relevant, grounded in source material, and easy to trace visually afterward. Full execution traces support Prometheus and OpenTelemetry for production observability.

Flowise runs on your laptop, a private server, or enterprise cloud with support for 100+ LLMs, embeddings, and vector databases. You can scale horizontally with message queues and workers.

For teams building internal tools or customer-facing chatbots, this mix of visual simplicity and production-grade infrastructure makes Flowise a dependable foundation.

Pricing

Flowise offers a free plan. The Starter Plan starts at $35/month, and the Pro Plan starts at $65/month.

7. Vertex AI Agent Builder: Best for enterprise and cloud-native AI agents

What is it: Vertex AI Agent Builder is a cloud-based platform from Google that lets developers and enterprises build, train, and deploy intelligent multi-agent systems.

Choose Vertex AI Agent Builder over LangChain if: You want a managed, production-ready environment with enterprise reliability, strong integrations, and smooth scaling inside Google Cloud.

Vertex AI Agent Builder brings together everything a business needs to move from experimentation to real deployment.

It gives developers the flexibility to code agents using the Agent Development Kit (ADK) while also offering low-code tools for faster builds.

You can start small with a simple conversational agent or connect multiple agents through the Agent2Agent protocol to collaborate across systems securely.

The framework supports grounding responses in real data from Google Enterprise Search or BigQuery, so the outputs remain factual and context-aware.

During a quick test, I connected a demo agent to a company knowledge base and asked it, “Summarize recent sales insights.”

Within seconds, it retrieved figures directly from BigQuery and delivered a clear summary that matched the dataset exactly. The human-like, multimodal interactions with bidirectional audio and video streaming make agents feel more natural in customer-facing roles.

Agents can tap into 100+ pre-built connectors to enterprise systems like ERP, procurement, and HR platforms, plus custom APIs managed through Apigee.

Agent Engine handles infrastructure, scaling, security, and monitoring so you focus on capabilities.

Combined with Google Cloud's security controls, content filters, and guardrails, Vertex AI Agent Builder offers a complete environment for building reliable, scalable AI agents at production scale.

Pricing

Pricing is pay-as-you-go.

8. Vellum: Best for enterprise-grade AI development and governance

What is it: Vellum helps teams design, test, and deploy production-ready AI systems with proper evaluation, monitoring, and control built in from the start.

Choose Vellum over LangChain if: You want a single workspace to experiment, measure, and manage AI products without losing track of what performs best.

Think of Vellum as an operating system for AI product teams. Everything from prompt iteration to deployment happens in one environment, so experiments stay organized and traceable.

You can run side-by-side evaluations across models, monitor results in real time, and promote winning versions straight to production without breaking what's live.

It fits into the team workflow naturally. Engineers can tweak models and prompts, while product managers can review output quality without digging through code.

You can build agents with plain English and see full visibility into every execution step, reasoning path, and tool call.

When you set up a chatbot workflow using Vellum's built-in evaluation tools, it logs accuracy scores and improvement trends after each run, helping you see progress rather than guess it.
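
For a sense of what a side-by-side evaluation boils down to, here is a minimal offline sketch in plain Python. It is not Vellum's SDK. The `fake_model` stub, the two prompt variants, and the exact-match scoring rule are assumptions chosen so the example runs without any API key.

```python
def fake_model(prompt_template: str, question: str) -> str:
    """Stand-in for an LLM call so the sketch runs offline."""
    answers = {"2+2": "4", "capital of France": "Paris"}
    reply = answers.get(question, "unknown")
    # Pretend the terse template yields clean answers and the other adds noise
    return reply if "concise" in prompt_template else f"I think it is {reply}!"


def accuracy(prompt_template: str, cases: list[tuple[str, str]]) -> float:
    """Share of test cases whose output exactly matches the expected answer."""
    hits = sum(fake_model(prompt_template, q) == expected for q, expected in cases)
    return hits / len(cases)


cases = [("2+2", "4"), ("capital of France", "Paris")]
variants = {
    "v1": "Answer the question: {question}",
    "v2": "Be concise. Answer: {question}",
}
scores = {name: accuracy(template, cases) for name, template in variants.items()}
best = max(scores, key=scores.get)
print(scores, "promote:", best)
```

Exact match is a deliberately strict metric. In practice you would likely mix it with looser checks, but a fixed test set scored the same way on every run is what turns prompt tweaks into measurable progress.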

Once in production, Vellum tracks performance and usage automatically, flagging drift or latency issues before they become real problems. Version control and isolated environments let you iterate safely.

Vellum ships to APIs, SDKs, and embedded widgets so you can deploy agents however your stack requires. The platform is built for teams that view AI as a core product capability, not a side project.

Pricing

Vellum offers a free plan. The paid plans start at $25/month (Pro) and $79/user/month (Business).

Try Lindy: An AI assistant that handles support, outreach, and automation

Lindy uses conversational AI that handles not just chat, but also lead gen, meeting notes, and customer support. It handles requests instantly and adapts to user intent with accurate replies.

Here's how Lindy goes the extra mile:

- Fast replies in your support inbox: Lindy answers customer queries in seconds, reducing wait times and missed messages.

- 24/7 agent availability for async teams: You can set Lindy agents to run 24/7, which suits async workflows and round-the-clock support coverage.

- Support in 30+ languages: Lindy’s phone agents support over 30 languages, letting your team handle calls in new regions.

- Add Lindy to your site: Embed Lindy with a simple code snippet so visitors can get answers instantly without leaving the page.

- Integrates with your tools: Lindy integrates with tools like Stripe and Intercom, helping you connect your workflows without extra setup.

- Handles high-volume requests without slowdown: Lindy handles any volume of requests and even teams up with other instances to tackle the most demanding scenarios.

- Lindy does more than chat: There’s a huge variety of Lindy automations, from content creation to coding. Check out the full Lindy templates list.

Try Lindy free and automate your first 40 tasks today.

FAQs

1. What is LangChain?

LangChain is an open-source framework for building applications powered by large language models. It provides tools to connect LLMs with external data, APIs, and memory so they can reason, plan, and complete multi-step tasks.

In short, LangChain helps developers move from simple prompts to full-fledged AI applications that can interact with the real world.

2. What are the use cases of LangChain?

LangChain is mainly used for creating chatbots, retrieval-augmented generation (RAG) systems, document summarization tools, and data analysis assistants. Developers often rely on it to build agents that can call external tools, reference private knowledge bases, and handle multi-step workflows.

It’s also popular for integrating AI into existing business applications such as CRMs, support systems, and content platforms.

3. How to use LangChain for your business?

You can use LangChain to automate parts of your operations where natural language understanding adds value. For example, it can power customer service bots that pull data from your internal documents, or research assistants that summarize and organize reports.

The setup involves selecting a language model, defining prompts, connecting to your data sources, and configuring memory or retrieval modules. Once these are in place, LangChain helps you orchestrate workflows that respond dynamically to user input or business data.

4. What is better than LangChain?

Lindy is better than LangChain if your goal is to deploy production-ready agents without managing code-heavy frameworks. While LangChain is a developer-first toolkit, Lindy focuses on usability and business outcomes.

It lets teams automate workflows directly through natural language prompts, connect to thousands of tools, and monitor results in a secure, no-code environment.

5. Is LangChain a Python library?

Yes, LangChain is primarily a Python library, though a JavaScript/TypeScript version is also available. The Python version remains the most widely used, offering rich support for data connections, model integrations, and workflow orchestration.
